A break in play during a Small Size League match.

Today, 21 July, saw the competition draw to a close in a thrilling finale. In the third and final of our round-up articles, we provide a flavour of the action from this last day. If you missed them, you can find our first two digests here: 19 July | 20 July.

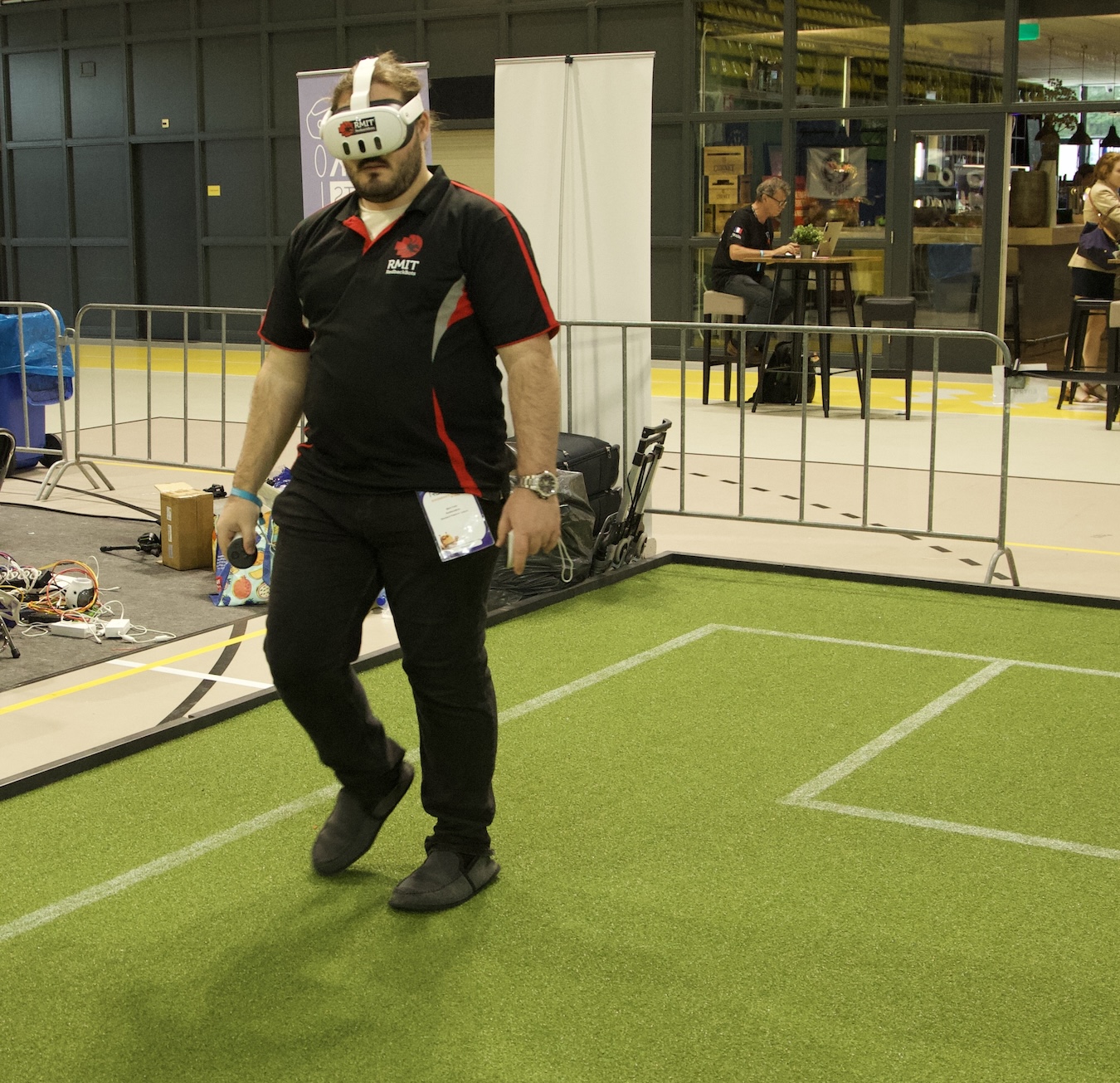

My first port of call this morning was the Standard Platform League, where Dr. Timothy Wiley and Tom Ellis from Team RedbackBots, RMIT University, Melbourne, Australia, demonstrated an exciting advance that is unique to their team. They have developed an augmented reality (AR) system with the aim of making the on-field action easier to understand and explain.

RedbackBots for 2024 (L-R: Murray Owens, Sam Griffiths, Tom Ellis, Dr. Timothy Wiley, Mark Field, Jasper Avice Demay). Photo credit: Dr. Timothy Wiley.

Timothy, the academic leader of the team, explained: “What our students proposed at the end of last year's competition, as a contribution to the league, was to develop an augmented reality (AR) visualization of what the league calls the team communication monitor. This is a piece of software that is displayed on the TV screens for the audience and the referee, and it shows you where the robots think they are, information about the game, and where the ball is. We set out to make an AR system of this because we believe it is much better to see it overlaid on the field. AR lets us project all of this information live onto the field as the robots move.”

The team has been demonstrating the system to the league at the event, with very positive feedback. In fact, one of the teams found an error in their software during a game while trying out the AR system. Tom said that they have received a lot of ideas and suggestions from the other teams for further development. This is one of the first (if not the first) AR systems to be trialled at the competition, and it is the first time one has been used in the Standard Platform League. I was lucky enough to get a demo from Tom, and it definitely added a new level to the viewing experience. It will be very interesting to see how the system evolves.

Mark setting up the MetaQuest3 to use the augmented reality system. Photo credit: Dr. Timothy Wiley.

From the main soccer area, I headed to the RoboCupJunior zone, where Rui Baptist, a member of the Executive Committee, gave me a tour of the arenas and introduced me to some of the teams that have been using machine learning models to assist their robots. RoboCupJunior is a competition for school children and is split into three leagues: Soccer, Rescue and OnStage.

First I caught up with four teams from the Rescue league. Robots identify “victims” within re-created disaster scenarios, which vary in complexity from following a line on a flat surface to negotiating paths through obstacles on uneven terrain. There are three different strands to the league: 1) Rescue Line, where robots follow a black line that leads them to a victim, 2) Rescue Maze, where robots need to explore a maze and identify victims, 3) Rescue Simulation, which is a simulated version of the maze competition.

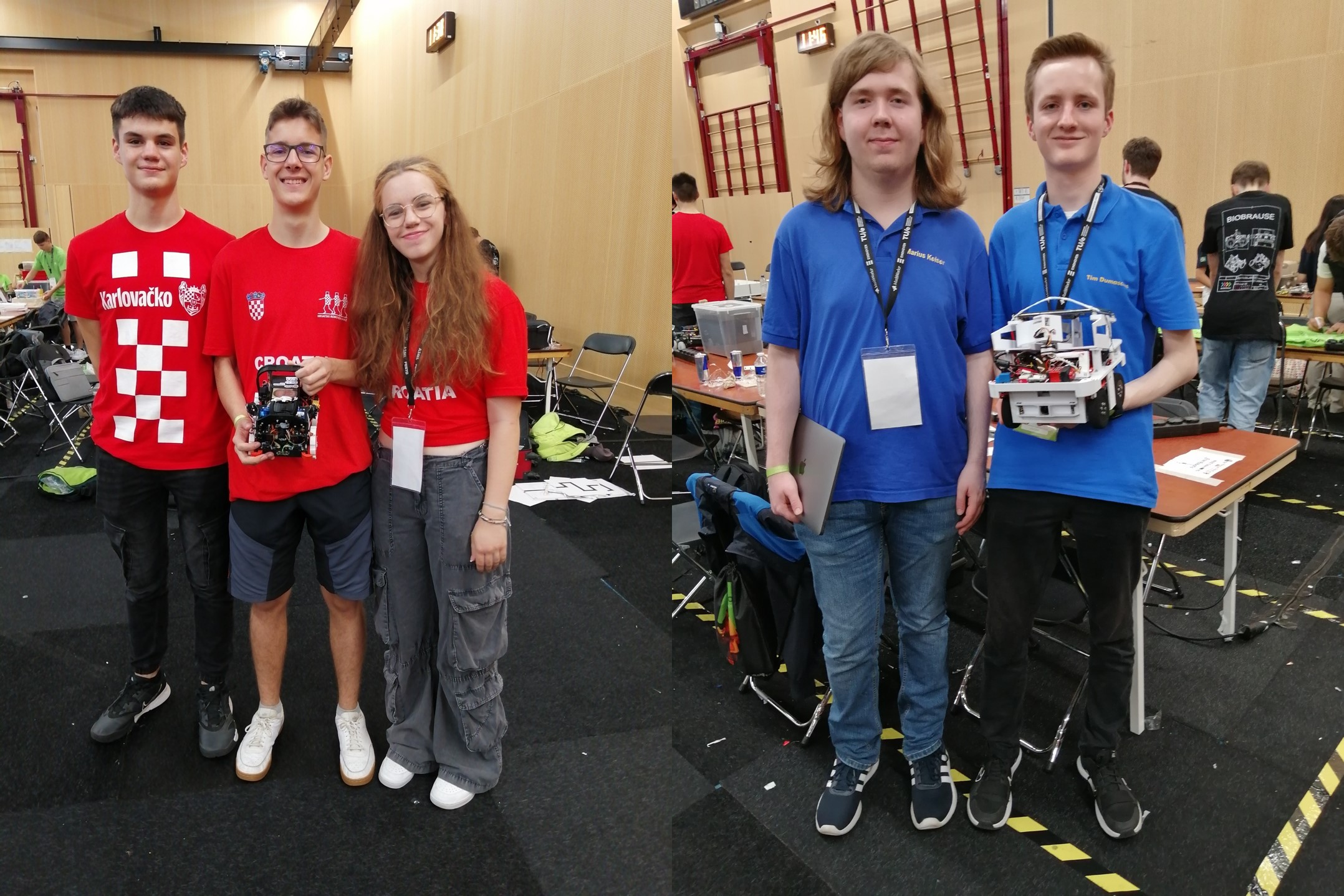

Team Skollska Knjgia, taking part in Rescue Line, used a YOLO v8 neural network to detect victims in the evacuation zone. They trained the network themselves with about 5000 images. Also competing in Rescue Line were Team Overengeniering2. They too used YOLO v8 neural networks, in this case for two elements of their system. The first model handles detection in the evacuation zone, including the victims. Their second model is used during line following, and allows the robot to detect when the black line (used for most of the task) changes to a silver line, which marks the evacuation zone.

Left: Team Skollska Knjgia. Right: Team Overengeniering2.

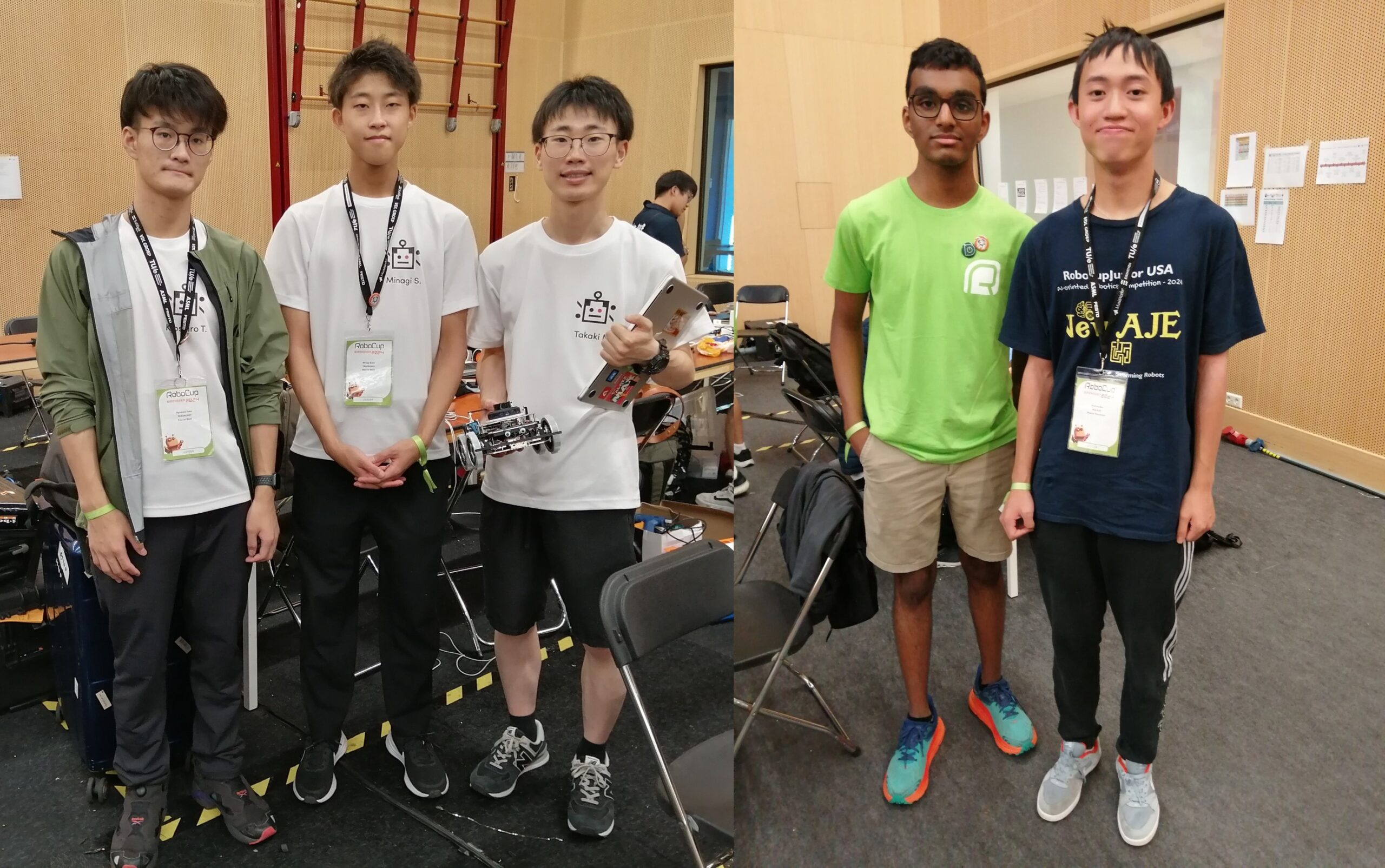

Team Tanorobo! were taking part in the maze competition. They also used a machine learning model for victim detection, trained on 3000 photos for each type of victim (these are denoted by different letters in the maze). They also took photos of walls and obstacles, to avoid misclassification. Team New Aje was taking part in the simulation competition. They used a graphical user interface to train their machine learning model and to tune their navigation algorithms. They have three different navigation algorithms, with varying computational cost, which they can switch between depending on where they are in the maze (and how complex that part of it is).

Left: Team Tanorobo! Right: Team New Aje.
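To give a feel for how switching between navigation algorithms of different cost might look, here is a minimal sketch. It is not Team New Aje's code: the grid representation, the function names, the planner names and the rule of counting open neighbouring cells as a measure of local complexity are all assumptions made for illustration.

```python
def open_neighbours(grid, cell):
    """Return the free cells directly above, below, left and right of `cell`.

    `grid` is a list of rows where 0 marks a free cell and 1 marks a wall
    (a hypothetical maze encoding chosen for this sketch).
    """
    rows, cols = len(grid), len(grid[0])
    r, c = cell
    steps = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
    return [
        (nr, nc)
        for nr, nc in steps
        if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0
    ]


def choose_planner(grid, cell):
    """Pick the cheapest planner that suits the local maze complexity.

    Complexity is approximated by the number of open neighbours:
    dead ends and corridors get the cheapest planner, T-junctions a
    mid-cost one, and four-way crossings the most expensive one.
    The planner names are placeholders, not real algorithms.
    """
    branches = len(open_neighbours(grid, cell))
    if branches <= 2:
        return "follow_corridor"   # lowest cost
    if branches == 3:
        return "local_heuristic"   # medium cost
    return "full_search"           # highest cost


crossing = [
    [1, 0, 1],
    [0, 0, 0],
    [1, 0, 1],
]
print(choose_planner(crossing, (1, 1)))  # centre of a four-way crossing
print(choose_planner(crossing, (0, 1)))  # dead end at the top
```

The point of the design is that the expensive planner only runs where the maze actually branches, so simple stretches cost very little computation.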

I met two of the teams that had recently performed in the OnStage event. Team Medic's performance was based on a medical scenario and included two machine learning elements. The first was voice recognition, for communicating with the “patient” robots, and the second was image recognition, for classifying X-rays. Team Jam Session's robot reads in American Sign Language symbols and uses them to play a piano. They used the MediaPipe detection algorithm to find different points on the hand, and random forest classifiers to determine which symbol was being displayed.

Left: Team Medic Bot. Right: Team Jam Session.
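As a rough illustration of the step between hand-point detection and classification, the sketch below turns a set of hand landmarks into a feature vector that a classifier such as a random forest could consume. This is an assumption about how such a pipeline could be wired together, not Team Jam Session's implementation: the function name and the normalisation scheme are hypothetical, and only the count of 21 landmarks per hand, with the wrist at index 0, comes from MediaPipe's hand model.

```python
from math import hypot

NUM_LANDMARKS = 21  # MediaPipe's hand model reports 21 landmarks per hand


def landmarks_to_features(landmarks):
    """Convert 21 (x, y) hand landmarks into a 42-value feature vector.

    Coordinates are taken relative to the wrist (landmark 0) and divided
    by the largest wrist-to-landmark distance, so the features do not
    depend on where the hand is in the image or how large it appears.
    This normalisation is an illustrative choice, not a documented one.
    """
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(
            f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}"
        )
    wrist_x, wrist_y = landmarks[0]
    relative = [(x - wrist_x, y - wrist_y) for x, y in landmarks]
    # Fall back to 1.0 if every point coincides with the wrist.
    scale = max(hypot(dx, dy) for dx, dy in relative) or 1.0
    return [value / scale for point in relative for value in point]


# Made-up landmark positions, just to exercise the function.
hand = [(0.1 * i, 0.05 * i * i) for i in range(NUM_LANDMARKS)]
features = landmarks_to_features(hand)
print(len(features))
```

Each feature vector, paired with the symbol being signed, would form one training example for the classifier.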

My next stop was the Humanoid League, where the final match was taking place. The arena was packed to the rafters with crowds eager to see the action.

Standing room only to see the adult-size humanoids.

The finals continued with the Middle Size League, where the home team, Tech United Eindhoven, beat BigHeroX by a convincing 6-1 scoreline. You can watch the livestream of the final day's action here.

The grand finale pitted the winners of the Middle Size League (Tech United Eindhoven) against five RoboCup trustees. The humans ran out 5-2 winners, their superior movement proving too much for Tech United.

AIhub is a non-profit dedicated to connecting the AI community to the public by providing free, high-quality information in AI.

Lucy Smith is Managing Editor for AIhub.